#template metaprogramming

Explore tagged Tumblr posts

Text

C++ / TMP - Polimorfismo estatico

Hoy les traigo otra etapa mas de la metaprogramacion de template. Espero les sea de utilidad!

Bienvenidos sean a este post, hoy veremos una de las etapas de TMP. En este post hablamos sobre polimorfismo, y podemos resumirlo como multiples funciones bajo el mismo nombre. Siendo que el polimorfismo dinamico nos permite determinar la funcion actual que usaremos al momento de ejecucion. En cambio, el polimorfirmo estatico solo sabra la funcion actual que debe correr al momento de la…

0 notes

Text

"You want to see something gross?" "YES!!!! *long pause* ewwwwww....."

did i hear this conversation between:

(1) two lil kiddos on the playground marveling over a dead bug, or

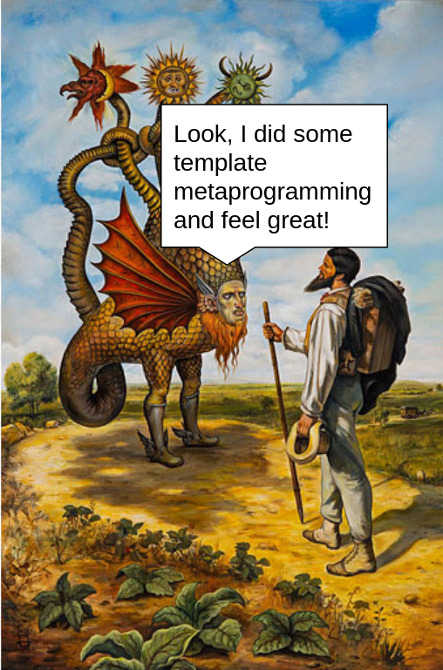

(2) two horrifyingly skilled c++ programmers, one of whom has just sinned against God personally via template metaprogramming & the other who is encouraging that nonsense

44 notes

·

View notes

Text

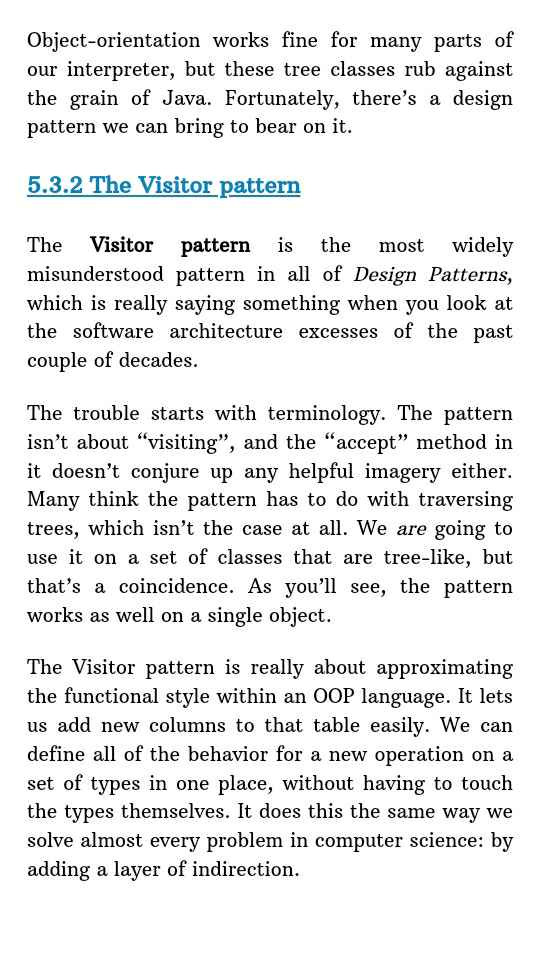

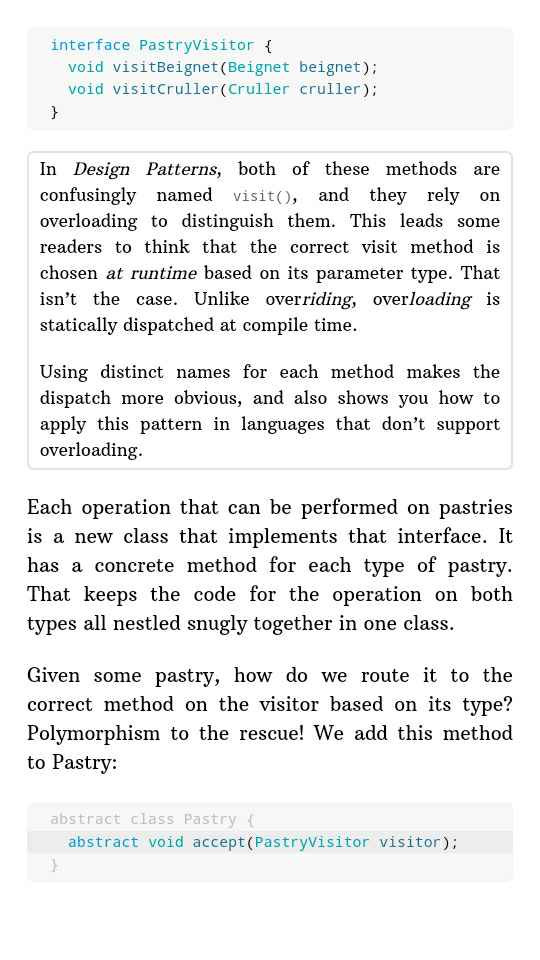

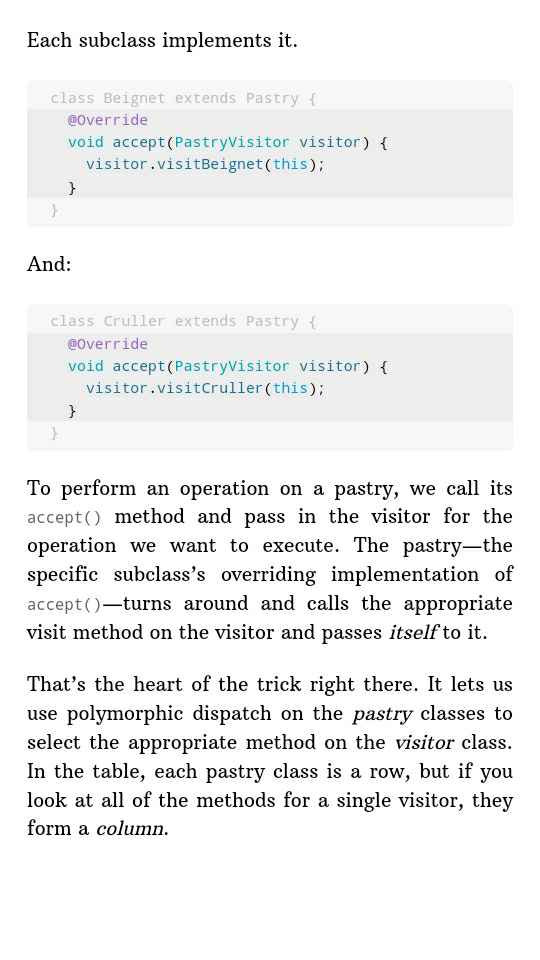

related to my ill-advised godot stunts, i feel like this is maybe the worst technical writing i've ever seen.

admittedly i don't read a lot of technical writing? but like. a visitor pattern is already something that is deeply situational and difficult to contextualize, and using a pastry metaphor because you're clinging to it as a gimmick for your book is like. not helpful at all. it's insane.

and to really fucking cap it off, this is being explained as a part of metaprogramming to template and automatically generate a bunch of classes to parse expressions. so you're not even writing the pattern, you're writing code that writes the pattern, so you don't get hands on with it. and reading this while experiencing the symptoms of starting spiro and at the end of a long day like. it fucking broke me.

(it's from crafting interpreters by robert nystrom, an otherwise very good book on the matter thus far)

7 notes

·

View notes

Note

5 best things about C++ that aren't constexpr

5 worst things about C++

Best compared to what though

You always gotta look at what the competition forgot to bring.

--- Best:

RAII/Deterministic destruction

const

templates and template metaprogramming (in the interest of fairness to other languages that cannot pretend to compete I will make this one item)

perf

C Interop

--- Meh:

C Interop

--- Worst:

C interop

ABI back compat is killing me slowly

UB, especially UB NDR fuck NDR all my homies hate NDR

the "noexcept" specifier is hot garbage and yet it could have been so good

the build system

the build system

the build system

the build system

the build system

the build system

the build system

the build system

18 notes

·

View notes

Text

#codetober 2023. 10. 05.

So the question arises:

propaganda:

C++: my strong suit, will make progress quicker, kinda know what to do next. Too familiar tbh.

Rust: the perfect opportunity to finally try it in a medium sized project, building up the knowledge in the go. Probably will be very slow in the beginning, might discourage me.

Today's goal is coming up some basic design (language independent) on the whiteboard to see the connections and interfaces I might need.

I want to decouple the "business logic" from the visualisation as a design principle. Also want to keep things simple and performant.

What's your favorite programming language? Why?

Easy question: C++

The expressiveness of the template metaprogramming is unparalleled. No other language I know of can do the same with the same flexibility. I recently wrote a Tower of Hanoi solver that creates the steps for the solution in compile time (and gives the compiler error message of the solution to prove it is done before anything is ever ran). It even has an addition to generate the steps and the states it goes through as ASCII art at compile time using TMP. And this is not even that advanced usage, just a little toy example.

It's somewhat a love-hate relationship.

18 notes

·

View notes

Text

C++ Quiz Challenge: Test Your Programming Prowess! Are you confident in your C++ programming skills? Whether you're preparing for a technical interview or simply want to test your knowledge, this comprehensive quiz will challenge your understanding of essential C++ concepts. Let's dive into ten carefully crafted questions that every developer should be able to answer. 1. Smart Pointer Fundamentals Consider this code snippet: cppCopystd::unique_ptr ptr1(new int(42)); std::unique_ptr ptr2 = ptr1; What happens when you try to compile and execute this code? More importantly, why? The above code will fail to compile. This exemplifies one of the fundamental principles of unique_ptr: it cannot be copied, only moved. This restriction ensures single ownership semantics, preventing multiple pointers from managing the same resource. To transfer ownership, you would need to use std::move: cppCopystd::unique_ptr ptr2 = std::move(ptr1); 2. The Virtual Destructor Puzzle Let's examine this inheritance scenario: cppCopyclass Base public: ~Base() ; class Derived : public Base public: Derived() resource = new int[1000]; ~Derived() delete[] resource; private: int* resource; ; int main() Base* ptr = new Derived(); delete ptr; Is there a potential issue here? If so, what's the solution? This code contains a subtle but dangerous memory leak. When we delete through a base class pointer, if the destructor isn't virtual, only the base class destructor is called. The solution is to declare the base class destructor as virtual: cppCopyvirtual ~Base() 3. Template Metaprogramming Challenge Can you spot what's unique about this factorial implementation? cppCopytemplate struct Factorial static const unsigned int value = N * Factorial::value; ; template struct Factorial static const unsigned int value = 1; ; This demonstrates compile-time computation using template metaprogramming. The factorial is calculated during compilation, not at runtime, resulting in zero runtime overhead. This technique is particularly useful for optimizing performance-critical code. 4. The const Conundrum What's the difference between these declarations? cppCopyconst int* ptr1; int const* ptr2; int* const ptr3; This question tests understanding of const placement: const int* and int const* are equivalent: pointer to a constant integer int* const is different: constant pointer to an integer Remember: read from right to left, const applies to what's immediately to its left (or right if nothing's to the left). 5. Rule of Five Implementation Modern C++ emphasizes the "Rule of Five." What five special member functions should you consider implementing? The Rule of Five states that if you implement any of these, you typically need all five: Destructor Copy constructor Copy assignment operator Move constructor Move assignment operator Here's a practical example: cppCopyclass ResourceManager public: ~ResourceManager(); // Destructor ResourceManager(const ResourceManager&); // Copy constructor ResourceManager& operator=(const ResourceManager&); // Copy assignment ResourceManager(ResourceManager&&) noexcept; // Move constructor ResourceManager& operator=(ResourceManager&&) noexcept; // Move assignment ; 6. Lambda Expression Mastery What will this code output? cppCopyint multiplier = 10; auto lambda = [multiplier](int x) return x * multiplier; ; multiplier = 20; std::cout

0 notes

Text

C++ for Advanced Developers

C++ is a high-performance programming language known for its speed, flexibility, and depth. While beginners focus on syntax and basic constructs, advanced developers harness C++'s full potential by leveraging its powerful features like templates, memory management, and concurrency. In this post, we’ll explore the key areas that elevate your C++ skills to an expert level.

1. Mastering Templates and Meta-Programming

Templates are one of the most powerful features in C++. They allow you to write generic and reusable code. Advanced template usage includes:

Template Specialization

Variadic Templates (C++11 and beyond)

Template Metaprogramming using constexpr and if constexpr

template<typename T> void print(T value) { std::cout << value << std::endl; } // Variadic template example template<typename T, typename... Args> void printAll(T first, Args... args) { std::cout << first << " "; printAll(args...); }

2. Smart Pointers and RAII

Manual memory management is error-prone. Modern C++ introduces smart pointers such as:

std::unique_ptr: Exclusive ownership

std::shared_ptr: Shared ownership

std::weak_ptr: Non-owning references

RAII (Resource Acquisition Is Initialization) ensures that resources are released when objects go out of scope.#include <memory> void example() { std::unique_ptr<int> ptr = std::make_unique<int>(10); std::cout << *ptr << std::endl; }

3. Move Semantics and Rvalue References

To avoid unnecessary copying, modern C++ allows you to move resources using move semantics and rvalue references.

Use T&& to denote rvalue references

Implement move constructors and move assignment operators

class MyClass { std::vector<int> data; public: MyClass(std::vector<int>&& d) : data(std::move(d)) {} };

4. Concurrency and Multithreading

Modern C++ supports multithreading with the `<thread>` library. Key features include:

std::thread for spawning threads

std::mutex and std::lock_guard for synchronization

std::async and std::future for asynchronous tasks

#include <thread> void task() { std::cout << "Running in a thread" << std::endl; } int main() { std::thread t(task); t.join(); }

5. Lambda Expressions and Functional Programming

Lambdas make your code more concise and functional. You can capture variables by value or reference, and pass lambdas to algorithms.std::vector<int> nums = {1, 2, 3, 4}; std::for_each(nums.begin(), nums.end(), [](int x) { std::cout << x * x << " "; });

6. STL Mastery

The Standard Template Library (STL) provides containers and algorithms. Advanced usage includes:

Custom comparators with std::set or std::priority_queue

Using std::map, std::unordered_map, std::deque, etc.

Range-based algorithms (C++20: ranges::)

7. Best Practices for Large Codebases

Use header files responsibly to avoid multiple inclusions.

Follow the Rule of Five (or Zero) when implementing constructors and destructors.

Enable warnings and use static analysis tools.

Write unit tests and benchmarks to maintain code quality and performance.

Conclusion

Advanced C++ programming unlocks powerful capabilities for performance-critical applications. By mastering templates, smart pointers, multithreading, move semantics, and STL, you can write cleaner, faster, and more maintainable code. Keep experimenting with modern features from C++11 to C++20 and beyond — and never stop exploring!

0 notes

Text

Template Metaprogramming in C++ Guide

1. Introduction Brief Explanation Template Metaprogramming is a powerful feature in C++ that allows for generic programming and code generation at compile-time. It enables developers to write code that can manipulate and generate other code, leading to highly flexible and efficient solutions. Importance Enhances code reusability and maintainability. Facilitates writing generic code that works…

0 notes

Text

Exploring Template for Metaprogramming in Haskell Programming

Unlocking the Power of Template Haskell: Metaprogramming Techniques Explained Hello, fellow Haskell enthusiasts! In this blog post, I will introduce you to Template Haskell Metaprogramming – one of the most powerful and fascinating features in Haskell programming: Template Haskell. Template Haskell allows you to write code that generates and manipulates other Haskell code at compile time. This…

0 notes

Text

Which topic are taught under c++ course

A C++ course typically covers a range of topics designed to introduce students to the fundamentals of programming, as well as more advanced concepts specific to C++. Here's a breakdown of common topics:

1. Introduction to Programming Concepts

Basic Programming Concepts: Variables, data types, operators, expressions.

Input/Output: Using cin, cout, file handling.

Control Structures: Conditional statements (if, else, switch), loops (for, while, do-while).

2. C++ Syntax and Structure

Functions: Definition, declaration, parameters, return types, recursion.

Scope and Lifetime: Local and global variables, static variables.

Preprocessor Directives: #include, #define, macros.

3. Data Structures

Arrays: Single and multi-dimensional arrays, array manipulation.

Strings: C-style strings, string handling functions, the std::string class.

Pointers: Pointer basics, pointer arithmetic, pointers to functions, pointers and arrays.

Dynamic Memory Allocation: new and delete operators, dynamic arrays.

4. Object-Oriented Programming (OOP)

Classes and Objects: Definition, instantiation, access specifiers, member functions.

Constructors and Destructors: Initialization of objects, object cleanup.

Inheritance: Base and derived classes, single and multiple inheritance, access control.

Polymorphism: Function overloading, operator overloading, virtual functions, abstract classes, and interfaces.

Encapsulation: Use of private and public members, getter and setter functions.

Friend Functions and Classes: Use and purpose of friend keyword.

5. Advanced Topics

Templates: Function templates, class templates, template specialization.

Standard Template Library (STL): Vectors, lists, maps, sets, iterators, algorithms.

Exception Handling: try, catch, throw, custom exceptions.

File Handling: Reading from and writing to files using fstream, handling binary files.

Namespaces: Creating and using namespaces, the std namespace.

6. Memory Management

Dynamic Allocation: Managing memory with new and delete.

Smart Pointers: std::unique_ptr, std::shared_ptr, std::weak_ptr.

Memory Leaks and Debugging: Identifying and preventing memory leaks.

7. Algorithms and Problem Solving

Searching and Sorting Algorithms: Linear search, binary search, bubble sort, selection sort, insertion sort.

Recursion: Concepts and use-cases of recursive algorithms.

Data Structures: Linked lists, stacks, queues, trees, and graphs.

8. Multithreading and Concurrency

Thread Basics: Creating and managing threads.

Synchronization: Mutexes, locks, condition variables.

Concurrency Issues: Deadlocks, race conditions.

9. Best Practices and Coding Standards

Code Style: Naming conventions, commenting, formatting.

Optimization Techniques: Efficient coding practices, understanding compiler optimizations.

Design Patterns: Common patterns like Singleton, Factory, Observer.

10. Project Work and Applications

Building Applications: Developing simple to complex applications using C++.

Debugging and Testing: Using debugging tools, writing test cases.

Version Control: Introduction to version control systems like Git.

This comprehensive set of topics equips students with the skills needed to develop efficient, robust, and scalable software using C++. Depending on the course level, additional advanced topics like metaprogramming, networking, or game development might also be covered.

C++ course in chennai

Web designing course in chennai

full stack course in chennai

0 notes

Text

C++ / TMP - Optimizacion de codigo en compilacion

Hoy les traigo una nueva etapa del TMP, Metaprogramacion de Template, pero esta vez para la compilacion. Espero les sea de utilidad!

Bienvenidos sean a este post, hoy veremos una de las etapas de TMP En el post anterior, vimos como se puede calcular el factorial de un valor entero en el momento de la compilacion. En este post nos centraremos en analizar como utilizar un bucle en la ejecucion para poder desenrrollar los productos escalares de dos vectores con una longitud n. Siendo que n sera un valor conocido al momento de…

0 notes

Text

C++ Programming Assignment Help

C++ Programming Assignment Help to support you in achieving the best grades. It can be intimidating, especially for those who are unfamiliar with the nuances of this potent language. Do not worry; we are here to assist you in navigating the confusing world of pointers, classes, and templates in your C++ programming project. With a wealth of experience, our knowledgeable staff of experts is prepared to help you grasp C++ ideas and tackle difficult assignments. We cover everything from fundamental syntax to complex subjects like multithreading and template metaprogramming. Say goodbye to sleepless nights and hello to top-notch scores. Allow our assistance with C++ programming assignments to serve as your guide in the realm of exceptional coding.

0 notes

Text

https://gradespire.com/c-programming-assignment-help/

C++ Programming Assignment Help to support you in achieving the best grades. It can be intimidating, especially for those who are unfamiliar with the nuances of this potent language. Do not worry; we are here to assist you in navigating the confusing world of pointers, classes, and templates in your C++ programming project. With a wealth of experience, our knowledgeable staff of experts is prepared to help you grasp C++ ideas and tackle difficult assignments. We cover everything from fundamental syntax to complex subjects like multithreading and template metaprogramming. Say goodbye to sleepless nights and hello to top-notch scores. Allow our assistance with C++ programming assignments to serve as your guide in the realm of exceptional coding.

0 notes

Text

Intel FPGAs speed up databases with oneAPI and SIMD orders

A cutting-edge strategy for improving single-threaded CPU speed is Single Instruction Multiple Data (SIMD).

FPGAs are known for high-performance computing via customizing circuits for algorithms. Their tailored and optimized hardware accelerates difficult computations.

SIMD and FPGAs seem unrelated, yet this blog article will demonstrate their compatibility. By enabling data parallel processing, FPGAs can boost processing performance with SIMD. For many computationally intensive activities, FPGA adaptability and SIMD efficiency are appealing.

High-performance SIMDified programming

SIMD parallel processing applies a single instruction to numerous data objects. Special hardware extensions can execute the same instruction on several data objects simultaneously.

SIMDified processing uses data independence to boost software application performance by rewriting application code to use SIMD instructions extensively.

Key advantages of SIMDified processing include:

Increased performance: SIMDified processing boosts computationally intensive software applications.

Integrability: Intrinsics and dedicated data types make SIMDified processing desirable.

SIMDified processing is available on many current processors, giving it a viable option for computational speed improvement.

Despite its benefits, SIMDified processing is not ideal for many applications. Applications with minimal data parallelism will not benefit from SIMDified processing. It is a convincing method for improving data-intensive software applications.

SIMD Portability Supports Heterogeneity

SIMD registers and instructions make up SIMD instruction sets. SIMD intrinsics in C/C++ are the best low-level programming method for performance.

Low-level programming in heterogeneous settings with different hardware platforms, operating systems, architectures, and technologies is difficult due to hardware capabilities, data parallelism, and naming standards.

Specialized implementations limit portability between platforms, hence SIMD abstraction libraries provide a common SIMD interface and abstract SIMD functions. These libraries use C++ template metaprogramming and function template specializations to translate to SIMD intrinsics and potential compensations for missing functions, which must be implemented.

C/C++ libraries let developers construct SIMD-hardware-oblivious application code and SIMD extension code with minimum overhead. Separating SIMD-hardware-oblivious code with a SIMD abstraction library simplifies both sides.

This method has promoted many SIMD libraries and abstraction layers to solve problems:

Examples of SIMD libraries

Google Highway (open-source)

Xsimd (C++ wrapper for SIMD instances)

Such libraries allow SIMDified code to be designed once and specialized for the target SIMD platform by the SIMD abstraction library. Libraries and varied design environments suit SIMD instructions and abstraction.

Accelerating with FPGAs

FPGAs speed software at low cost and power. Traditional FPGAs required a strong understanding of digital design concepts and specific languages like VHDL or Verilog. FPGA-based solutions are harder to access and more specialized than CPU or GPU-based computing platforms due to programming complexity and code portability. Intel oneAPI changes this.

Intel oneAPI is a software development kit that unifies CPU, GPU, and FPGA programming. It supports C++, Fortran, Python, and Data Parallel C++ (DPC++) for heterogeneous computing to improve performance, productivity, and development time.

Since Intel oneAPI can target FPGAs from SYCL/C++, software developers are increasingly interested in using them for data processing. FPGAs can be used with SIMDified applications by adding them as a backend to the SIMD abstraction library. This allows SIMD applications with FPGAs.

SIMD and FPGAs go together Annotations let the Intel DPC++ compiler synthesis C++ code into circuits and auto-vectorize data-parallel processing. Annotating and implementing code arrays as registers on an FPGA removes data access constraints and allows parallel processing from sink to source. This enables SIMD performance acceleration using FPGAs straightforward and configurable.

SIMD abstraction libraries are a logical choice for FPGA SIMD processing. As noted, the libraries support Intel and ARM SIMD instruction set extensions. TSL abstraction library simplifies FPGA SIMD instruction implementation in the following example. The scalar code specifies loading registers, and the pragma unroll attribute tells the DPC++ Compiler to implement all pathways in parallel in the generic element-wise addition example below.

This simple element-wise example has no dependencies, and comparable implementations will work for SIMD instructions like scatter, gather, and store. Optimization can also accelerate complex instructions.

A horizontal reduction requires a compile-time adder tree of depth ld(N), where N is the number of entries. Unroll pragmas with compile-time constants can implement adder trees in a scalable manner, as shown in the following code example.

Software that calls a library of comparable SIMD components can expedite SIMD instructions on Intel FPGAs by adding the examples above.

Intel FPGA Board Support Package adds system benefits. Intel FPGAs use a BSP to describe hardware interfaces and offer a kernel shell.

The BSP enables SYCL Universal Shared Memory (USM), which frees the CPU from data transfer management by exchanging data directly with the accelerator. FPGAs can be coprocessors.

The pre-compiled BSP generates only kernel logic live, reducing runtime.

Intel FPGAs are ideal for SIMD and streaming applications like current composable databases because to their C++/SYCL compatibility, CPU data transfer offloading, and pre-compiled BSPs.

SIMD/FPGA simplicity At SiMoDSIGMOD 2023 in Seattle, USA, Dirk Habich, Alexander Krause, Johannes Pietrzyk, and Wolfgang Lehner of TU Dresden presented their paper “Simplicity done right for SIMDified query processing on CPU and FPGA” on using FPGAs to accelerate SIMD instructions. The work, supported by Intel’s Christian Färber, illustrates how practical and efficient developing a SIMDified kernel in an FPGA is while achieving top performance.

The paper evaluated FPGA acceleration of SIMD instructions using a dual-socket 3rd-generation Intel Xeon Scalable processor (code-named “Ice Lake”) with 36 cores and a base frequency of 2.2 GHz and a BitWare IA-840f acceleration card with an Intel Agilex 7 AGF027 FPGA and 4x 16 GB DDR4 memories.

First, they gradually increased the SIMD instance register width to see how it affected maximum acceleration bandwidth. The first instance, a simple aggregation, revealed that the FPGA accelerator’s bandwidth improves with data width doubling until the global bandwidth saturates an ideal acceleration case.

The second scenario, a filter-count kernel with a data dependency in the last stage of the adder tree, demonstrated similar behavior but saturates earlier at the PCIe link width. Both scenarios demonstrate the considerable speeding gains of natively parallel instructions on a highly parallel architecture and suggest that wide memory accesses could sustain the benefits.

Final performance comparisons compared the FPGA and CPU. CPU and FPGA received the same multi-threaded AVX512-based filter-count kernel. As expected, per-core CPU bandwidth decreased as thread count and CPU core count grew. FPGA performance was peak across all workloads.

Based on this work, the TU Dresden and Intel team researched how to use TSL to use an FPGA as a bespoke SIMD processor.

Read more on Govidhtech.com

0 notes

Text

Little guys don't have turing-complete template metaprogramming.

You would not believe the lengths I will go to avoid using C++, including for things that are explicitly supposed to be done in C++

1K notes

·

View notes

Text

image credit

10 notes

·

View notes